The Napkin Math Methodology for System Design

Two ways to use it:

- Designing systems

- Improving? Reality-checking?

I believe that first-principle thinking is required to break out of iterative improvement cycles and make order of magnitude improvements.

An illustrative example comes from SpaceX:

[..] physics teaches you to reason from first principles rather than by analogy. So I said, okay, let’s look at the first principles. What is a rocket made of? Aerospace-grade aluminum alloys, plus some titanium, copper, and carbon fiber. Then I asked, what is the value of those materials on the commodity market? It turned out that the materials cost of a rocket was around two percent of the typical price. — Elon Musk, via FS.blog

And he did indeed decrease the cost of a rocket by an order of magnitude.

We’ve solved over a dozen problems using the napkin math methodology. In this post, I want to zoom out and review the approach to serve as a high-level reference:

- Define. Problem, performance goals, and features.

- If you are designing for 8 demands it’s likely that 6 are easy, 7 requires serious contemplation, and that doing all 8 results in a worse system overall. Key lesson from system-design legend Jeff Dean.

- Let’s carry through an example: We have decided that a user can only have one active request at a time for throttling-related reasons. We don’t want this to take more than ~1ms per request.

- First principle model. Construct a first-principle model of the proposed

solution, starting with the simplest first. This can take minutes, or months.

It’s effectively designing the system.

- It is far easier to collaborate on a first principle model. At this level, all discussions are at the level of data structures, key operations, sources of complexity, and so on. It is good to separate this from at the database, programming language, or library you may use to execute those operations, which elegantly avoids those minefields.

- You may have to heavily instrument existing systems to inform the model / design. For example, average row bytesize, cache hit rate, flamegraphs, and/or response times of various subcomponents.

- I mean it when I say months. For one project, I spent months, lost, but I kept adding instrumenting and building dashboards until a simple solution to a complex problem evolved from the dashboards. For another, I had to read a couple of books, a dozen of papers, and tens of blog posts. For yet another, I had to sit with pen and paper for weeks to design a good data structure. Or write BPF-probes until something clicked.

- Good architecture is built incrementally, but not designed incrementally. The active-active, sharded architecture that powers Shopify today was drawn on a whiteboard in 2014 (not designed incrementally), and it took ~6 years to fully materialize, with a major customer-facing milestone every year (built incrementally). We would’ve hit a local maxima if we had not intentionally spelled this out.

- For our example, We’ve decided to figure out the performance penalty of acquiring a lock in Redis for every request. We’d draw a quick picture (paper or mental) of the network hops (same-zone), roughly what we’d expect Redis to do (random memory write, traverse TCP stack), and how many roundtrips we’d expect (2, one for acquire, one for release).

- Napkin math. Figure out the expected performance of the modelled system

using napkin math (possibly a simulation, if the math involves

probabilities). This is generally quick, unless a number isn’t available in the

napkin math reference, in which case you’ll need to obtain the

performance of that operation, e.g. matrix multiplication on a GPU.

- Following through the Redis lock example, we look up the operations in the reference: Two roundtrips to acquire and release the lock (~0.5ms each), two commands for the Redis server of ~10 s each, for a grant total of 1ms.

- Optimize. If the solution doesn’t satisfy your performance expectations, you will need to go back to your first principle model and move up a step on the complexity ladder. You’ll be able to re-use most of your existing work here.

- (Napkin math gap). If you are working on an existing system, and there’s a significant gap between the expected performance from the napkin math and reality, that gap represents and opportunity that needs to be resolved: Either there’s a room for optimization, or your first-principle model is wrong. When you’ve implemented your solution, you’ll go back and check too.

This process scales from building large-scale systems like a database to something as simple as how long a SQL query might take.

If you can’t reason from first-principle, you’ll reason from whatever happens to pop out. If you don’t have a strong mental model of your system, you won’t know how to analyze its performance properly:

The user analyzes performance by choosing observability tools that are familiar, found on the Internet, or just at random to see if anything obvious shows up. This approach is hit or miss and can overlook many types of issues. … named after an observational bias called the streetlight effect, illustrated by this parable: One night a police officer sees a drunk searching the ground beneath a streetlight and asks what he is looking for. The drunk says he has lost his keys. The police officer can’t find them either and asks: “Are you sure you lost them here, under the streetlight?” The drunk replies: “No, but this is where the light is best.” — Brendan Gregg, in Systems Performance

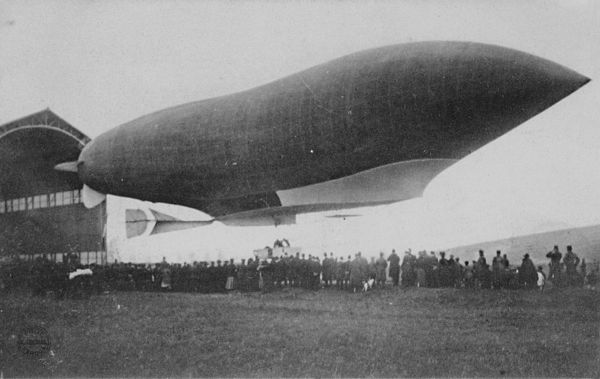

I will end with an observation by Wilbur Wright, famous by inventing the first ‘flying machine’ with his brother:

La Patrie [French state of the art Airship], was a giant, sausage-shaped gas bag with an open gondola for the crew hanging below. It passed over the Arc de Triomphe and almost directly over the Meurice at what Wilbur [Wright] estimated to be 15 miles per hour. He judged it a “very successful trial.” But as he was shortly to write, the cost of such an airship was ten times that of a Flyer, and a Flyer moved at twice the speed. The flying machine was in its infancy while the airship had “reached its limit and must soon become a thing of the past.” Still, the spectacle of the airship over Paris was a grand way to begin a day. — The Wright Brothers, David McCullough

Indeed, the flying machine was far more exciting because it was at the beginning of its potential, whereas the airship had limited utility, but had already exhausted its potential.

This is what I mean when I say that you need first-principle, napkin math thinking to break out of a local maxima: Are you iterating on an airship? Are you due to leap for the flying machine?

You won’t get order of magnitude improvements without first-principle thinking.

This may seem like an argument for always doing software rewrites for order-of-magnitude improvements. It’s not—but in some cases that may be what’s required. The argument is that you need to understand your problem and solution from first-principles to have a chance at them; rewrite or refactor is a judgment call once you’ve arrived at the model for what you need.

See the archives for examples of using this methodology.